OpenAI with Guardrail

Use Case Overview

We built a chatbot with two sides: a user side and an admin side. In this simulation, the user interacts with the chatbot by asking questions, while the admin is the owner, or whoever defines the criteria that the chatbot's questions must meet.

Users can ask questions and receive answers just like in the previous chatbot we created, with one difference: they can only ask questions within the specified criteria. For example, this chatbot is configured to respond only to questions about animals. If the user asks a question unrelated to animals, the chatbot replies that it cannot answer that question.

On the admin side, the admin has the privilege of setting the criteria for questions. Criteria can be added and cleared in the admin section of the chatbot, which also displays all the criteria currently in use.

User Flow

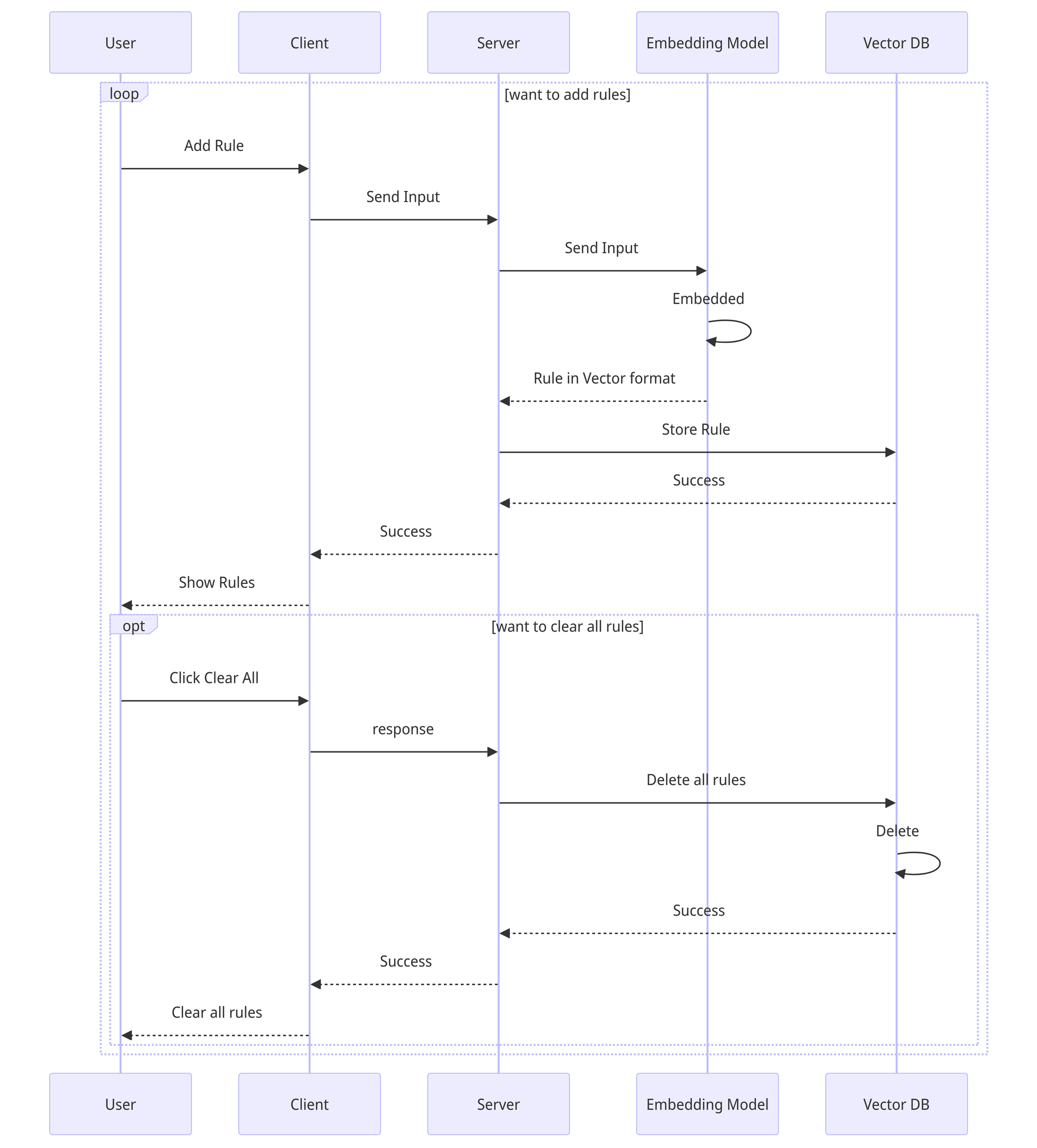

Admin

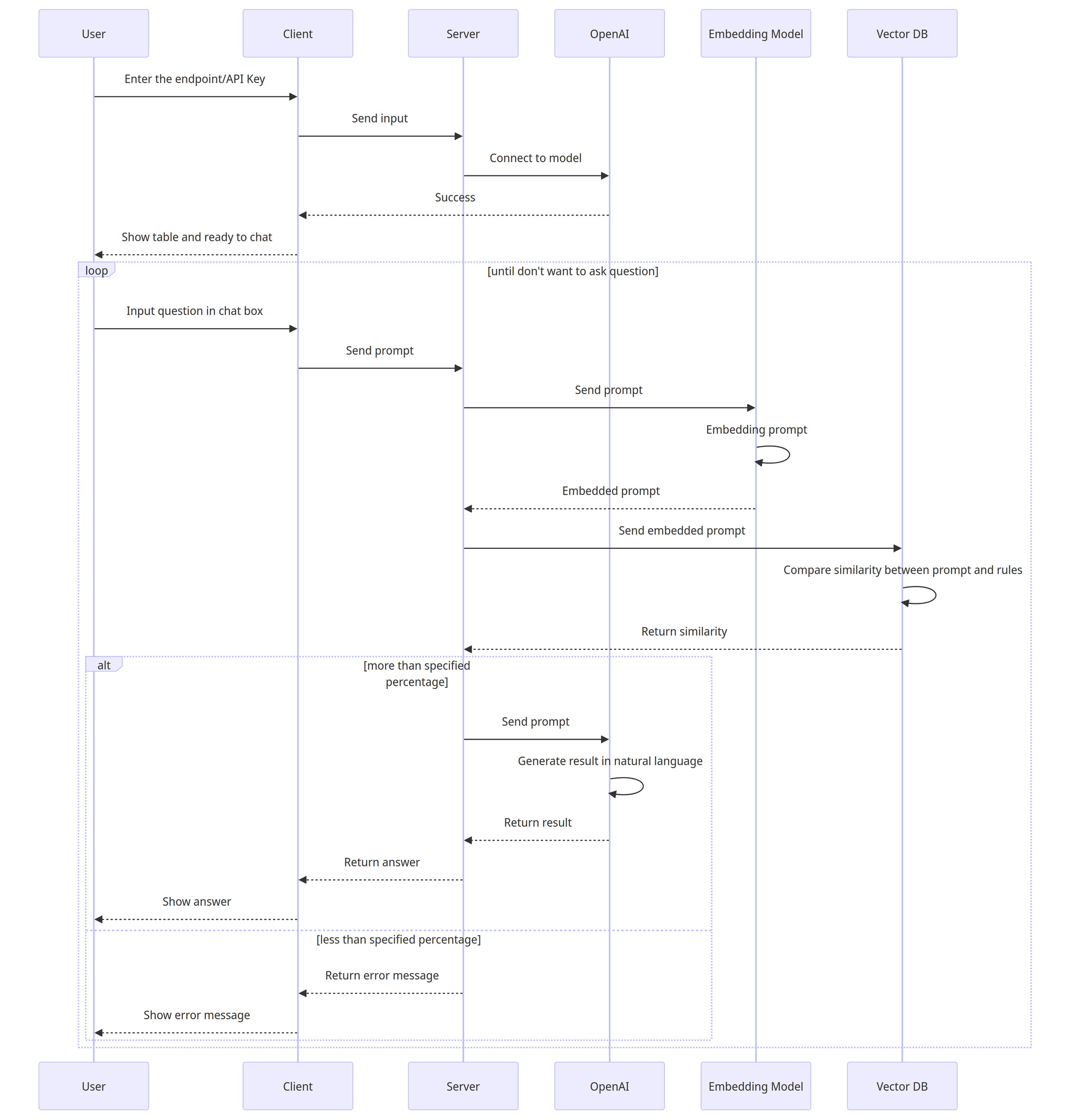

User

Frontend Development

Admin Interface for Rule Management

In the admin's rule management UI, the admin can create and delete rules and view the rules currently in place. The admin interface is built with React, which manages the various UI states. Forms are managed with react-hook-form, and input is validated with zod through @hookform/resolvers/zod. The connection to the API is handled by @tanstack/react-query, which provides various features for API interaction, such as useQueries for fetching multiple values simultaneously.

Adding and clearing rules uses useMutation. The advantage of this library is that it tracks request states such as loading, success, and error automatically. For example, after a rule is successfully added or cleared, the refetchRule function is triggered to update the table.

User Interface for Question Submission

The chatbot interface has an input section for typing messages to the AI. When a user types something unrelated to the specified rules, the API responds with a "bad prompt" message, and the user is asked to try a different question.

Now, let's see what happens when we ask a question about cats. Because the question falls within the rules, the AI responds by asking about the cat we inquired about. The chatbot's replies are delivered in a streaming format.

Guardrail Interaction

The Guardrail feature restricts which prompts the chatbot will respond to, based on the rules set by the admin. A retrieval step in the style of Retrieval-Augmented Generation (RAG) checks how closely the prompt matches the rules, and only a matching prompt is sent to OpenAI. If the similarity is below 99%, the API responds with a "bad prompt" message, indicating that the question falls outside the defined rules.
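
To make the 99% rule concrete, here is a small, dependency-free sketch of the comparison. It assumes the prompt and each rule have already been turned into embedding vectors and that "similarity" means cosine similarity; the source does not name the metric. The names `cosine_similarity` and `passes_guardrail` are illustrative, not part of the original code.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat zero vectors as unrelated
    return dot / (norm_a * norm_b)


def passes_guardrail(
    prompt_vec: Sequence[float],
    rule_vecs: Sequence[Sequence[float]],
    threshold: float = 0.99,  # the 99% figure described above
) -> bool:
    """Allow the prompt only if its closest rule reaches the threshold."""
    best = max((cosine_similarity(prompt_vec, r) for r in rule_vecs), default=0.0)
    return best >= threshold
```

With no rules at all, `max(..., default=0.0)` makes every prompt fail, which matches the idea that only questions inside the admin's criteria are answered.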

Error Handling and User Feedback

Error handling covers both server connection issues and user input errors. For example, if the server returns a 404 (Not Found) status code, the session is cleared and a new session is fetched. Validation errors in user input are handled with react-hook-form, @hookform/resolvers/zod, and zod.
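
The frontend implements this in React; the same flow is sketched here in Python only to keep one language across the examples. The function names, the `NotFoundError` type, and the choice to retry the request exactly once after fetching a new session are assumptions for illustration.

```python
from typing import Callable, Optional


class NotFoundError(Exception):
    """Raised by the hypothetical send function when the server answers 404."""


def send_with_session_retry(
    send: Callable[[str, str], str],
    get_session: Callable[[], str],
    clear_session: Callable[[], None],
    message: str,
    session_id: Optional[str] = None,
) -> str:
    """Send a message; on a 404, drop the stale session, fetch a new one, retry once."""
    if session_id is None:
        session_id = get_session()
    try:
        return send(session_id, message)
    except NotFoundError:
        clear_session()  # forget the stale session
        session_id = get_session()  # fetch a fresh one
        return send(session_id, message)  # a second 404 propagates to the caller
```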

Backend Development

Set Up FastAPI

Install the necessary Python 3 libraries for FastAPI:

Create a file named guardrail.py and initialize FastAPI:

Run the FastAPI server using the command:

This command starts the FastAPI application defined in guardrail.py; the server listens on port 8000 by default.

The API is divided into two parts: a user API, through which users send prompts to the backend to interact with the AI, and an admin API, through which administrators control which prompts may be sent to the AI.

Install other necessary dependencies:

The admin API provides functions for creating, viewing, and deleting the rules used to filter prompts; creating a rule essentially adds data to the VectorDB. VectorDB initialization is discussed later.

View all created rules:

Clear all rules:

For user interaction, an API is provided to send prompts and check whether they match any rules:

Integrating OpenAI API

Connect to the OpenAI API using an API key generated in the OpenAI console. Two methods are used: one calls an embedding model, and the other calls the Chatbot API.

Embedding model:
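
A sketch of the embedding call. The client is passed in so the function can be exercised without network access; in the service it would be `OpenAI()` from the `openai` package, which reads the key from the `OPENAI_API_KEY` environment variable. The model name `text-embedding-3-small` is an assumption, since the source does not say which embedding model is used.

```python
from typing import Any


def embed_texts(
    client: Any, texts: list[str], model: str = "text-embedding-3-small"
) -> list[list[float]]:
    """Return one embedding vector per input text, in input order."""
    response = client.embeddings.create(model=model, input=texts)
    # Each item carries the index of the input it belongs to.
    ordered = sorted(response.data, key=lambda item: item.index)
    return [item.embedding for item in ordered]
```

Usage, assuming the key is set: `from openai import OpenAI` and then `embed_texts(OpenAI(), ["Questions about animals"])`.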

Chatbot API:
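
A sketch of a streaming chat call using the OpenAI Python SDK's chat completions interface, which yields text pieces suitable for a streaming HTTP response. The model name `gpt-4o-mini` is an assumption, and the client is again passed in (in production, `OpenAI()`).

```python
from typing import Any, Iterator


def stream_chat(client: Any, prompt: str, model: str = "gpt-4o-mini") -> Iterator[str]:
    """Yield the answer text piece by piece as the model streams it."""
    stream = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": prompt}],
        stream=True,  # ask the API to send the reply in chunks
    )
    for chunk in stream:
        # Some chunks (for example the final one) carry no text, so skip them.
        if chunk.choices and chunk.choices[0].delta.content:
            yield chunk.choices[0].delta.content
```

Because `stream_chat` is a plain generator, it can be handed directly to FastAPI's `StreamingResponse`.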

Implementing VectorDB with Chroma

Chroma is used as the VectorDB; it stores data as vectors (embeddings), which makes it possible to find similar data. Initialization takes three parameters: the collection name, the data location, and the embedding model.
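
A sketch of that initialization with the three parameters named above. The path `./chroma_db`, the collection name `guardrail_rules`, and the OpenAI embedding model are assumptions. Chroma's default distance is squared L2; this sketch opts into cosine space so that a distance converts to a similarity as `1 - distance`, which makes a percentage threshold like 99% meaningful.

```python
import os


def create_rule_collection(path: str = "./chroma_db", name: str = "guardrail_rules"):
    """Open (or create) a persistent Chroma collection for the admin's rules."""
    # Imported lazily so this module loads even where chromadb is not installed.
    import chromadb
    from chromadb.utils import embedding_functions

    embedding_fn = embedding_functions.OpenAIEmbeddingFunction(
        api_key=os.environ["OPENAI_API_KEY"],
        model_name="text-embedding-3-small",  # assumed model
    )
    client = chromadb.PersistentClient(path=path)  # data location on disk
    return client.get_or_create_collection(
        name=name,
        embedding_function=embedding_fn,
        metadata={"hnsw:space": "cosine"},  # distance = 1 - cosine similarity
    )


def similarity_from_distance(distance: float) -> float:
    """Convert a cosine-space Chroma distance back into a similarity score."""
    return 1.0 - distance
```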

Building the Guardrail Feature

The Guardrail feature queries the rule data in the VectorDB with each received prompt. If the similarity score is above the threshold, the prompt is forwarded to the chatbot; otherwise, the API returns the "bad prompt" response.
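
A sketch of the guardrail check against a Chroma collection, assuming the collection was created in cosine space (so similarity is `1 - distance`) and the 99% threshold from the Guardrail Interaction section. Rejecting every prompt when no rules exist is an assumed policy, not something the source specifies.

```python
from typing import Any


def is_prompt_allowed(collection: Any, prompt: str, threshold: float = 0.99) -> bool:
    """Return True when the closest stored rule is similar enough to the prompt.

    Assumes `collection` is a Chroma collection created with cosine distance.
    """
    if collection.count() == 0:
        return False  # assumed policy: with no rules defined, reject everything
    result = collection.query(query_texts=[prompt], n_results=1)
    distances = result["distances"][0]  # distances for the first (only) query
    if not distances:
        return False
    return 1.0 - distances[0] >= threshold
```

In the prompt endpoint, `False` maps to the "bad prompt" response and `True` to forwarding the prompt to the chat call.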

Deploying on AWS EC2

Deployment uses the screen utility, which keeps the server running after you disconnect from the instance:

Create a session with a specific name:

Navigate to the API folder:

Start FastAPI on port 8000:

Detach from the current screen session:

API Gateway and CORS Configuration

Add CORS configuration for FastAPI:

Finally, connect this server to AWS API Gateway, which handles authentication and usage management for each request.
