Multiple Agents

Use Case Overview

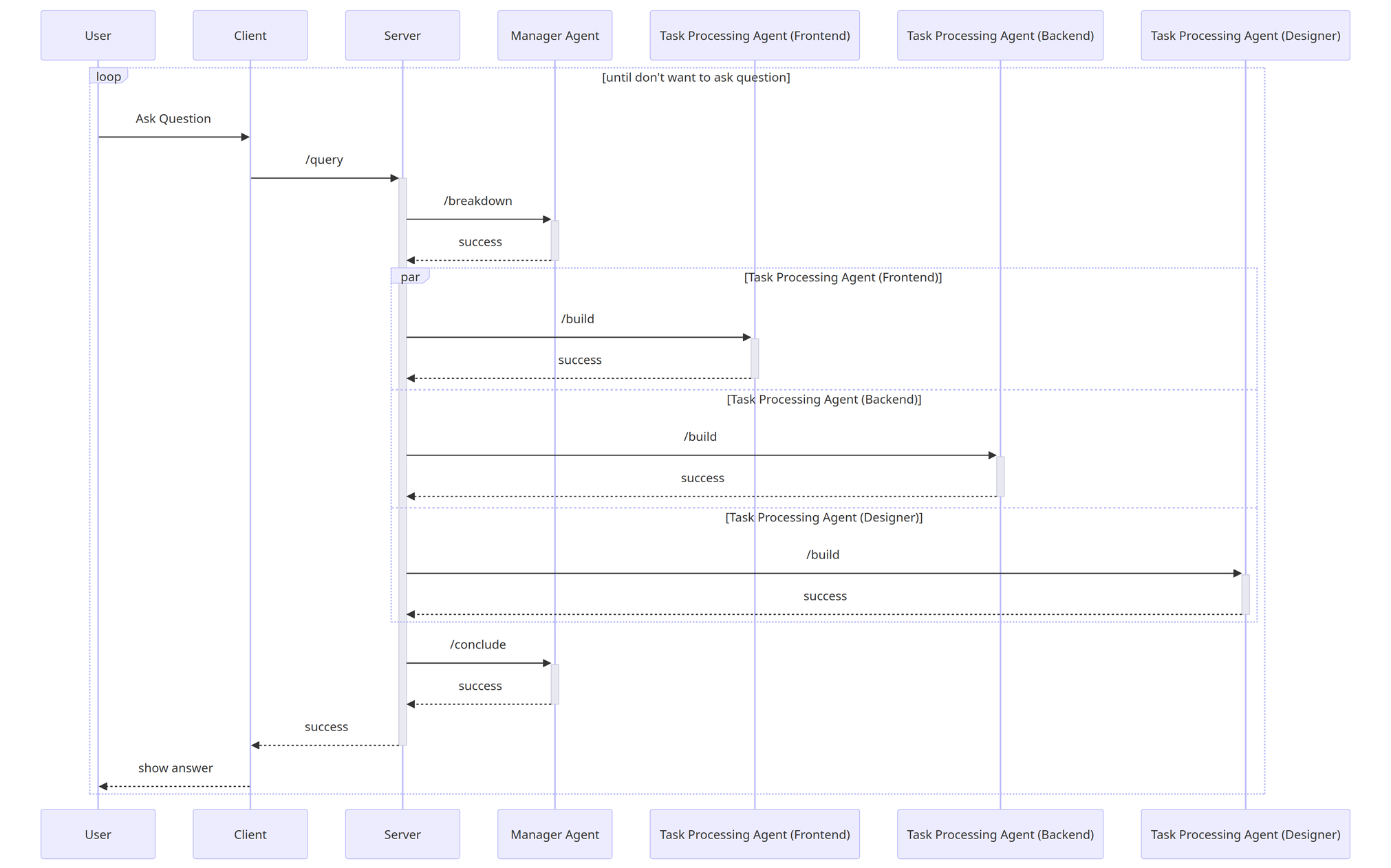

We built a chatbot specialized in website development. The first AI, the Manager, divides each request into tasks for three agents: Frontend, Backend, and Designer. Each agent answers questions in its own domain, and the responses from all agents are then summarized into a single answer.

When a user asks a question, such as "create blog," the response includes information from Frontend, Backend, and Designer, presented in clear sections for easy comprehension.

User Flow

AI

For the Large Language Model (LLM), we continue to use OpenAI to power the chatbot. This time, however, we split the work across multiple agents, each with a distinct role.

Manager Agent

The first AI is the Manager, which has two main responsibilities. First, it splits each user prompt into tasks and assigns every task to the most suitable agent, so that each part of a request is handled by a specialist. Second, it collects the answers from the Task Processing Agents, summarizes and organizes them, and presents the final response to the user.

Task Processing Agent

The Task Processing layer, which generates the actual responses, is divided into three agents:

Frontend: Generates responses for questions related to Frontend.

Backend: Handles responses in the Backend and Technical domain.

Designer: Generates responses related to UI and UX design.

These agents work on the tasks assigned by the Manager Agent. Once their tasks are complete, they send their responses back to the Manager Agent for consolidation.
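The division of roles described above can be sketched as follows. This is a minimal illustration, not the project's actual code: the Agent class, the run_agents helper, and the echo_llm stand-in (used instead of a real OpenAI call so the sketch runs offline) are all hypothetical names.

```python
from dataclasses import dataclass
from typing import Callable, Dict

# Hypothetical stand-in type for a model call: takes a prompt, returns text.
LLM = Callable[[str], str]

ROLES = ("Frontend", "Backend", "Designer")


@dataclass
class Agent:
    role: str
    llm: LLM

    def answer(self, task: str) -> str:
        # Each agent only sees the task the Manager assigned to its role.
        return self.llm(f"You are the {self.role} agent. Task: {task}")


def run_agents(tasks: Dict[str, str], llm: LLM) -> Dict[str, str]:
    """Send each assigned task to the matching agent and collect the answers."""
    agents = {role: Agent(role, llm) for role in ROLES}
    return {
        role: agents[role].answer(task)
        for role, task in tasks.items()
        if role in agents  # tasks for unknown roles are skipped
    }


def echo_llm(prompt: str) -> str:
    # Fake model so the sketch runs without network access.
    return f"[answer to: {prompt}]"


tasks = {"Frontend": "Build the blog list page", "Backend": "Design the posts API"}
answers = run_agents(tasks, echo_llm)
```

In this sketch the Manager's output is simply a mapping from role to task, which keeps the agents independent of one another.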

API

For the API, we continue to use FastAPI as the framework. The demo API includes the following:

/query

This endpoint combines all agents in a single call. The frontend calls it once when a user asks a question; the backend then generates the responses through the various agents, and the results are displayed in the chat without any further API calls.

Internally, /query calls the following APIs:

/breakdown: Used to break down questions into tasks for forwarding to specific agents.

/build: Used to generate responses.

/conclude: Used to collect responses and summarize them for presentation to the user.

/queryWithoutChain

For users who do not want to use multiple agents, we provide an API to connect directly to OpenAI.

Frontend Development

Implementing Input Box for User Prompts

To implement the input, we use the 'react-hook-form' library together with '@hookform/resolvers/zod' and 'zod'. As a first step, we create a schema with two fields: query, which holds the user's input, and bot, which carries error messages that come from the bot itself.

Once the schema is created, we generate a type interface for this form.

Next, we create methods:

The schema validates user input, and if the user submits without typing anything, an error message will be displayed in the user interface: 'Please enter your message.' The input component uses the 'react-hook-form' library along with useFormContext and Controller to manage input.

When using the input, set the name attribute to 'query' to match the variable declared in the schema.

When the submit button is pressed, if the input is validated correctly, we see the logged data from the onSubmit function. This data can then be sent to the backend for further processing.

Handle API Integration for Chained-LLM Agents & Display Output from Each API

To connect with the backend, we manage various states such as loading and error. We set the default base URL for the API.

After setting the base URL, we connect to the API.

We use useFormContext from 'react-hook-form' to read the loading and error states, exposed as isSubmitting and errors.

While a request is in flight, we show a loading state. If an API error occurs, we display an error message from the bot.

In the ChatWindow component, we manage various states to display in the UI, including the results from the API.

Implement Process Continuation Functionality

After successfully connecting with the backend, we need to store the result message obtained from the API. We create a state to store the user and bot answers.

Now, when we ask the AI to create a blog, for example, the LLM agents respond with tasks for each role: Designer, Frontend, and Backend.

Backend Development

Setting Up a FastAPI Project

As in the earlier examples, we use FastAPI as the framework for building and serving our API.

Start by installing the necessary Python libraries for FastAPI:

Create a file named app.py and initialize FastAPI:

In this example code, we initialize a FastAPI project and enable CORS for connecting with the frontend. To run the server, use the following command:

This command starts the FastAPI application declared in app.py. The server runs on the default port, 8000.

Now, let's create another file named LocalTemplate.py to store initial templates for asking questions to the Chatbot. These templates include:

Manager-template: Divides tasks for incoming questions to be used by each agent.

Agent-template: Divides into three agents: Frontend, Backend, and Designer. Each agent answers questions related to their role.

Conclusion-template: Summarizes all received answers for a concise overview.
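The actual template text is not shown in this guide, so the following is only a sketch of what LocalTemplate.py might contain. The wording of each template, the placeholder names (need, role, task, answers), and the render_agent_prompt helper are assumptions made for illustration.

```python
# LocalTemplate.py (illustrative wording only; the real templates may differ)

MANAGER_TEMPLATE = (
    "You are a project manager for website development.\n"
    "Split the customer's need into one task per agent "
    "(Frontend, Backend, Designer) and reply as JSON.\n"
    "Customer need: {need}"
)

AGENT_TEMPLATE = (
    "You are the {role} agent on a website project.\n"
    "Answer only within your role.\n"
    "Task: {task}"
)

CONCLUSION_TEMPLATE = (
    "Customer need: {need}\n"
    "Agent answers:\n{answers}\n"
    "Summarize the answers in one section per agent."
)


def render_agent_prompt(role: str, task: str) -> str:
    """Fill the shared agent template for one specific role."""
    return AGENT_TEMPLATE.format(role=role, task=task)


prompt = render_agent_prompt("Backend", "Design the posts API")
```

Using one shared agent template with a role placeholder, rather than three separate templates, is a design choice of this sketch; either approach fits the description above.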

We divide the API into five routes, each covering a different function. The following sections explain them one by one.

Developing the Manager Agent API

The first API we create is POST: /breakdown, which handles the breakdown of a customer's need into tasks for each agent:

This API takes a customer's need as input and processes it to create tasks for each agent. The resulting tasks are then returned as a JSON response.
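A framework-independent sketch of the logic behind /breakdown might look like this. It assumes the Manager model is asked to reply with a JSON object keyed by agent name; that reply format, the breakdown function, and the fake_manager stand-in are hypothetical. In the real project this logic would sit inside the FastAPI route handler.

```python
import json
from typing import Callable, Dict

ROLES = ("Frontend", "Backend", "Designer")


def breakdown(need: str, llm: Callable[[str], str]) -> Dict[str, str]:
    """Ask the Manager model to split a need into one task per agent."""
    prompt = (
        "Split this need into tasks. Reply with a JSON object whose keys are "
        f"{', '.join(ROLES)}.\nNeed: {need}"
    )
    raw = llm(prompt)
    try:
        parsed = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"Manager reply was not valid JSON: {raw!r}") from exc
    if not isinstance(parsed, dict):
        raise ValueError("Manager reply must be a JSON object")
    # Keep only known roles so a stray key never reaches the agents.
    return {role: str(parsed[role]) for role in ROLES if role in parsed}


def fake_manager(prompt: str) -> str:
    # Offline stand-in for the OpenAI call.
    return json.dumps({
        "Frontend": "Build the blog pages",
        "Backend": "Create the posts API",
        "Designer": "Design the blog layout",
        "Other": "ignored",
    })


tasks = breakdown("create blog", fake_manager)
```

Validating the reply before passing it on is worthwhile because model output is not guaranteed to be well-formed JSON.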

Building Task Processing APIs

Next, we create an API POST: /build that processes the tasks for each agent:

This API takes a list of tasks and processes them for each agent (Frontend, Backend, Designer), returning the raw and JSON format of the generated tasks.
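The processing step could be sketched as below. Because the three agents do not depend on each other, this sketch runs their calls concurrently with a thread pool; the source does not say whether the real /build does this, so treat the concurrency, the build function, and the fake_llm stand-in as assumptions.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Tuple


def build(tasks: Dict[str, str], llm: Callable[[str], str]) -> Dict[str, str]:
    """Run every agent's task and return a {role: answer} mapping."""

    def run_one(item: Tuple[str, str]) -> Tuple[str, str]:
        role, task = item
        return role, llm(f"You are the {role} agent. Task: {task}")

    # The agents are independent, so their model calls can overlap.
    # pool.map returns results in input order, so role order is preserved.
    with ThreadPoolExecutor(max_workers=max(1, len(tasks))) as pool:
        return dict(pool.map(run_one, tasks.items()))


def fake_llm(prompt: str) -> str:
    # Offline stand-in for the OpenAI call.
    return prompt.upper()


answers = build({"Frontend": "pages", "Designer": "layout"}, fake_llm)
```

Threads suit this case because the work is dominated by waiting on network responses rather than by computation.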

Creating a Manager Summary API

Now, we create an API POST: /conclude for summarizing all the received information:

This API takes the initial customer need and the tasks generated by each agent and produces a summarized conclusion in both raw and JSON formats.
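The summary step might be sketched like this. The exact shape of the "raw" and "JSON" outputs is not specified in this guide, so the response layout below (a raw string plus a structured object with need, summary, and sections) is an assumption, as are the conclude function and the fake_llm stand-in.

```python
from typing import Callable, Dict


def conclude(
    need: str, answers: Dict[str, str], llm: Callable[[str], str]
) -> Dict[str, object]:
    """Summarize all agent answers for the original customer need."""
    # Present each agent's answer under its own heading in the prompt.
    sections = "\n".join(f"{role}:\n{text}" for role, text in answers.items())
    raw = llm(
        f"Customer need: {need}\n{sections}\n"
        "Summarize with one section per role."
    )
    # "raw" is the model's text; "json" is a structured view for the frontend.
    return {
        "raw": raw,
        "json": {"need": need, "summary": raw, "sections": dict(answers)},
    }


def fake_llm(prompt: str) -> str:
    # Offline stand-in for the OpenAI call.
    return "Frontend: pages. Backend: API."


agent_answers = {"Frontend": "Build pages", "Backend": "Build API"}
result = conclude("create blog", agent_answers, fake_llm)
```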

Implementing a Chained-LLM Wrapper API

For a more streamlined process, we create an API POST: /query that combines all the steps:

This API takes a question, processes it through the entire chain of tasks and agents, and returns the raw and JSON format of the summarized conclusion.
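The chain can be sketched end to end as three steps in sequence. The prompt prefixes, the response layout, and the fake_llm stand-in are hypothetical and exist only so the sketch runs offline; the real wrapper would use the templates and the OpenAI model.

```python
import json
from typing import Callable, Dict

LLM = Callable[[str], str]


def query(question: str, llm: LLM) -> Dict[str, object]:
    """Run breakdown, build, and conclude in sequence for one question."""
    # Step 1 (/breakdown): the Manager splits the question into tasks per role.
    tasks = json.loads(llm(f"BREAKDOWN as JSON: {question}"))
    # Step 2 (/build): each agent answers its own task.
    answers = {role: llm(f"{role} TASK: {task}") for role, task in tasks.items()}
    # Step 3 (/conclude): the Manager summarizes all answers.
    summary = llm(f"CONCLUDE for '{question}': {json.dumps(answers)}")
    return {"raw": summary, "json": {"tasks": tasks, "answers": answers}}


def fake_llm(prompt: str) -> str:
    # Offline stand-in that recognizes the three prompt kinds used above.
    if prompt.startswith("BREAKDOWN"):
        return json.dumps({"Frontend": "pages", "Backend": "API", "Designer": "layout"})
    if prompt.startswith("CONCLUDE"):
        return "Summary with Frontend, Backend, and Designer sections"
    return f"done: {prompt}"


result = query("create blog", fake_llm)
```

Keeping the three steps as separate calls inside one wrapper means the frontend needs only a single request, while each step stays available as its own route for debugging.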

Direct OpenAI Prompt API Integration

To have a more direct interaction with the Chatbot, we create an API POST: /queryWithoutChain:

This API takes a question, sends it directly to the Chatbot without going through the task and agent chain, and returns the raw and JSON format of the Chatbot's response.

Deploying and Monitoring on EC2

For deploying FastAPI on an EC2 instance:

Create a screen session with a name of your choice:

Navigate to the API folder:

Start FastAPI on port 8000:

Detach from the screen session:

The server now keeps running in the background, even after you exit the terminal.

Setting Up API Gateway and Implementing CORS

To integrate the API with AWS API Gateway:

Add CORS configuration to FastAPI:

This configuration enables CORS on the FastAPI server. Then connect the server to AWS API Gateway to add centralized management, authentication, and rate limiting.
